Sliding-Window Temporal Attention Based Deep Learning System for Robust Sensor Modality Fusion for UGV Navigation

H. Utku Unlu, N. Patel, P. Krishnamurthy, F. Khorrami

Published in IEEE Robotics and Automation Letters - July 2019

Abstract

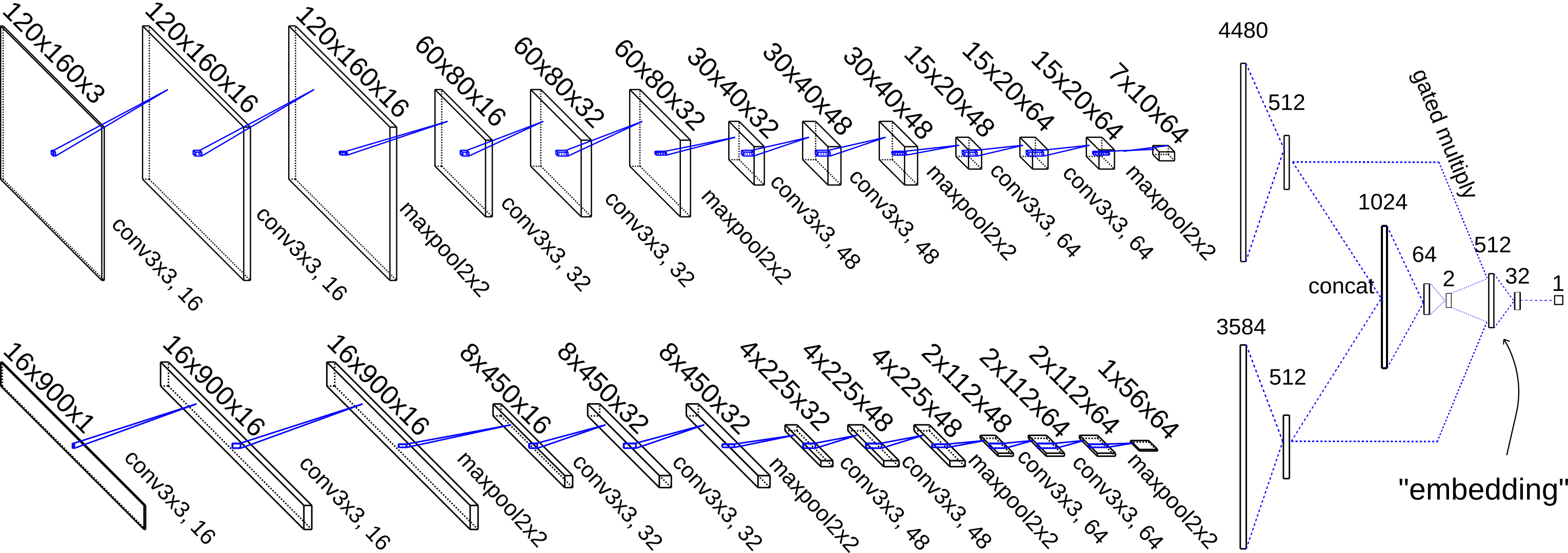

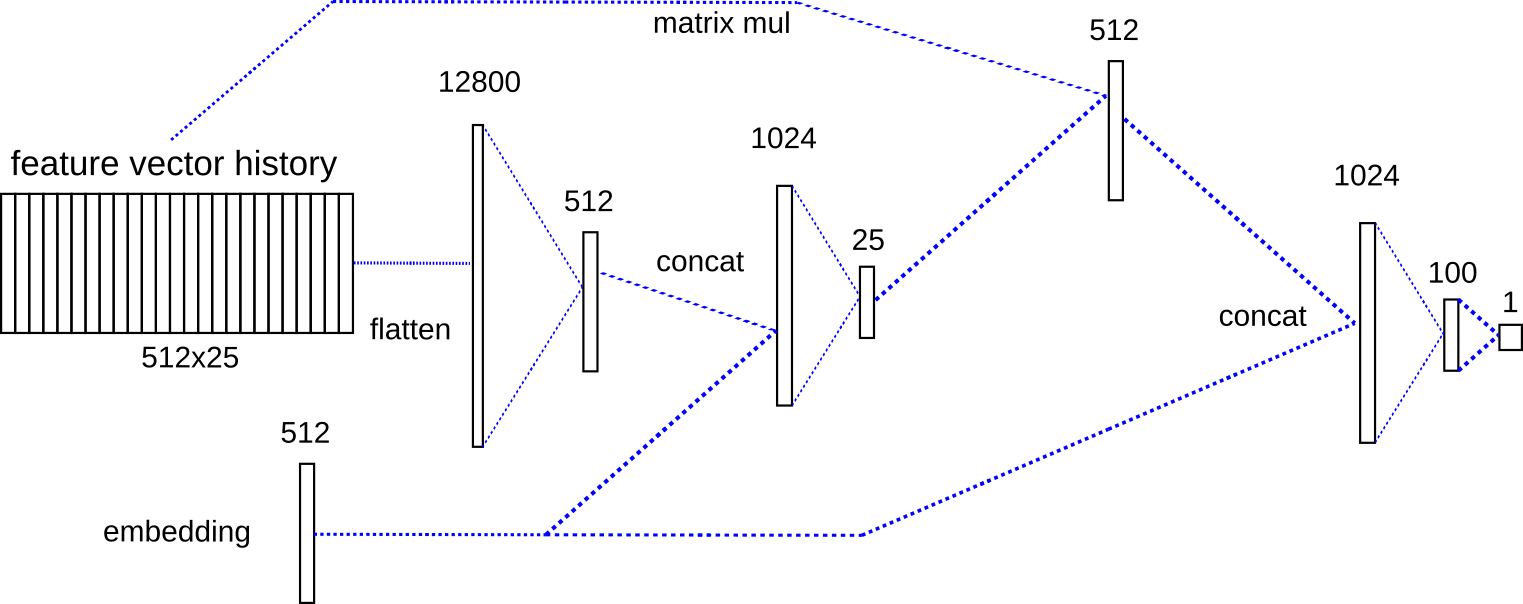

We propose a novel temporal-attention-based neural network architecture for robotics tasks that involve fusing time series of sensor data, and we evaluate the resulting performance improvements in the context of autonomous navigation of unmanned ground vehicles (UGVs) in uncertain environments. The architecture generates feature vectors by fusing raw pixel and depth values collected by camera(s) and LiDAR(s), stores a history of the generated feature vectors, and combines the temporally attended history with the current features to predict a steering command. Experimental studies show robust performance in unknown and cluttered environments. Furthermore, the temporal attention is resilient to noise, bias, blur, and occlusions in the sensor signals. We trained the network on indoor corridor datasets collected with our UGV, which will be publicly released. The datasets contain LiDAR depth measurements, camera images, and human tele-operation commands.

NetGated Dropout Architecture
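The fusion step described in the abstract, in which camera and LiDAR features are combined into a single feature vector, can be illustrated with a generic gated-fusion sketch. This is not the paper's NetGated Dropout network. The per-modality scalar gates, the softmax that normalizes them, the weighted-sum combination, and the whole-modality dropout used as a training-time augmentation are all assumptions made for illustration, and the functions `softmax`, `modality_dropout`, and `gated_fusion` are hypothetical names.

```python
import math
import random
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def modality_dropout(features: List[Vector], p: float,
                     rng: random.Random) -> List[Vector]:
    """Hypothetical training-time augmentation (assumption, not from the paper):
    zero out each modality's whole feature vector with probability p,
    while always keeping at least one modality."""
    kept = [rng.random() >= p for _ in features]
    if not any(kept):
        kept[rng.randrange(len(features))] = True
    return [f if k else [0.0] * len(f) for f, k in zip(features, kept)]


def gated_fusion(features: List[Vector], gate_scores: List[float]) -> Vector:
    """Fuse equal-length per-modality feature vectors as a weighted sum.

    The gate scores are passed in directly here; in a trained network they
    would be produced by a learned layer (assumed gating form)."""
    if len(features) != len(gate_scores):
        raise ValueError("need one gate score per modality")
    weights = softmax(gate_scores)
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features))
            for i in range(dim)]


# Usage: equal gate scores give each modality a weight of 0.5.
camera = [1.0, 2.0, 3.0]
lidar = [3.0, 2.0, 1.0]
print(gated_fusion([camera, lidar], gate_scores=[0.0, 0.0]))  # [2.0, 2.0, 2.0]
```

A softmax keeps the gate weights positive and summing to one, so the fused vector stays on the same scale as the inputs; an independent sigmoid gate per modality would be an equally plausible choice in such a sketch.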

Sliding-Window Temporal Fusion Architecture
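The abstract's sliding-window history and temporal attention can be sketched as follows. The sketch assumes a fixed window length, scaled dot-product attention with the current feature vector as the query and the stored past vectors as keys and values, concatenation of the attended history with the current features, and a linear steering head. The class `SlidingWindowAttention`, its parameters, and the choice to attend over past frames only are hypothetical and do not describe the authors' implementation.

```python
import math
from collections import deque
from typing import Deque, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


class SlidingWindowAttention:
    """Hypothetical sketch: keep the last `window` fused feature vectors and
    attend over them with scaled dot-product attention."""

    def __init__(self, window: int, steer_weights: Vector,
                 steer_bias: float = 0.0) -> None:
        # deque(maxlen=...) silently drops the oldest entry when full.
        self.history: Deque[Vector] = deque(maxlen=window)
        # Linear steering head over [current | attended history] (assumption).
        self.steer_weights = steer_weights
        self.steer_bias = steer_bias

    def attend(self, query: Vector) -> Tuple[Vector, List[float]]:
        """Return the attention-weighted average of the stored history and
        the attention weights (an empty history gives a zero context)."""
        if not self.history:
            return [0.0] * len(query), []
        scale = math.sqrt(len(query))
        scores = [dot(query, h) / scale for h in self.history]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        context = [sum(w * h[i] for w, h in zip(weights, self.history))
                   for i in range(len(query))]
        return context, weights

    def step(self, current: Vector) -> float:
        """Attend over past features only (assumption), predict a steering
        value, then push a copy of the current features into the window."""
        context, _ = self.attend(current)
        combined = current + context  # list concatenation, length 2 * d
        if len(combined) != len(self.steer_weights):
            raise ValueError("steer_weights must have length 2 * feature dim")
        steering = dot(self.steer_weights, combined) + self.steer_bias
        self.history.append(list(current))
        return steering


# Usage: one call per control cycle with the current fused feature vector.
model = SlidingWindowAttention(window=3, steer_weights=[0.5, -0.5, 0.25, 0.25])
for features in ([1.0, 0.0], [0.8, 0.2], [0.6, 0.4], [0.4, 0.6]):
    print(round(model.step(features), 3))
```

Bounding the history with `deque(maxlen=window)` makes the window slide automatically: once it is full, each new frame evicts the oldest one, so memory and attention cost stay constant per control cycle.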

BibTeX

@article{UnluPKK19,
  author  = {Halil Utku Unlu and
             Naman Patel and
             Prashanth Krishnamurthy and
             Farshad Khorrami},
  title   = {Sliding-Window Temporal Attention Based Deep Learning System for Robust Sensor Modality Fusion for {UGV} Navigation},
  journal = {IEEE Robotics and Automation Letters},
  volume  = {4},
  number  = {4},
  pages   = {4216--4223},
  year    = {2019}
}